IMPORTANT DATES

August 24th, 2020

Paper Submission

September 4th, 2020

Paper Submission

September 24th, 2020

Acceptance Notification

October 8th, 2020

Submission of Final Paper & Poster

October 25th - November 25th 2020

Workshop @ IROS On-Demand

Welcome to the 3rd Workshop on Proximity Perception in Robotics at IROS 2020: Towards Multi-Modal Cognition

Starting 25th of October, IROS On-Demand 2020

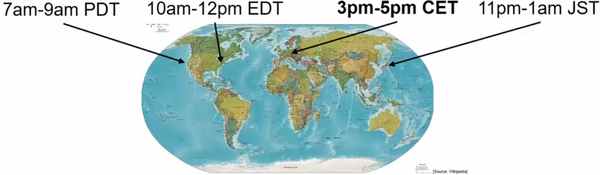

Panel Discussion + Q&A for Invited Speakers, October 28th, 3pm-5pm CET via ZOOM

PhD-Forum, October 29th, 3pm-5pm CET via ZOOM

Attention: As announced by the IROS 2020 organizing committee, the conference will not take place in person and has moved to an on-demand online format. Our workshop will therefore also move to this online format. The invited talks will be available on demand, while some parts of the workshop, such as the panel discussion and the PhD-Forum, will be held live. We plan to add recordings of the live events to the on-demand platform later on. Please see the program for details.

In its 3rd edition, this workshop aims to explore and demonstrate the potential of proximity perception for cognitive robotics. Active proximity perception has great potential for Human-Robot Interaction (HRI) as well as for modeling objects and the environment in a multi-modal context. Proximity perception is complementary to vision and touch, and the technology is now mature enough to be deployed alongside cameras and tactile sensors. For example, many researchers have already successfully addressed the challenge of building multi-modal skins that combine tactile and proximity perception. However, little research has been directed towards active perception and sensor fusion that include the proximity modality. To address this, the workshop features experts in multi-modal HRI and visuo-haptic perception as well as experts from industry, who will foster the discussion with their experience in these domains. We expect their contributions to inspire multi-modal cognitive approaches to proximity perception. Furthermore, a demo session will anchor these ideas in concrete hardware that is already available today from both research and industry participants.

- Foster the community of roboticists and hardware developers working in this domain

- Let experts in multi-modal perception and HRI share their experience to help motivate novel cognitive approaches for proximity perception in robotics

- Continue to bridge to industry via invited industrial talks and demos

- Offer PhD students a forum for their work through short talks, posters and demos

- Foster scientific collaborations between the participants of the workshop

Mailing-List: see here.

Sponsors

Toyoda Gosei

Blue Danube Robotics

Support

We are grateful for the support of the following organizations:

- IEEE RAS Technical Committee on Robotic Hands, Grasping and Manipulation

- IEEE RAS Technical Committee on Human-Robot Interaction and Coordination

- IEEE RAS Technical Committee on Haptics

- IEEE RAS Austria Section

Call for Collaboration Proposals

Submission deadline: November 13th, 2020

Events like IROS and its workshops are where we meet new people, exchange ideas, and perhaps even start new collaborations. In fact, the organization of this workshop grew out of encounters that first happened at IROS some years ago.

Inspired by this, we want to foster collaboration among the participants of our workshop at all levels of experience (from students to professors, from industry and academia). To bring potential collaborations closer to reality, we are issuing a call for collaborative projects. The winning proposal will receive a scholarship (1000-1500 USD), which can be used to:

- Support an exchange activity between two laboratories, for instance by funding a plane ticket

- Exchange research materials (e.g., by covering shipping costs)

- Cover the publication fees of an article

- Fund other activities that foster collaboration (to be specified and justified in the proposal)

To apply, write a short proposal (1 page; for a template, click here). The proposals will be evaluated by an independent jury based on:

- Relevance to the workshop topics: proximity sensing, cognitive perception for tactile and proximity sensing, multi-modal sensor fusion and exploration, etc.

- Interdisciplinarity of the team: for instance, one expert in hardware and another in machine learning

- Description of how the prize money will be used

iros2020-workshop [at] joanneum.at

Call for Papers

Click here to download the Call for Papers as PDF.

All prospective participants are invited to present novel results as posters in the poster and demo session. Additionally, we offer PhD students the opportunity to present their work through short talks in a dedicated forum.

Submission for poster session or PhD forum:

Papers should be 2 pages long (maximum 3 pages). Novel ideas or experimental results are required for acceptance. Click here to download the LaTeX template (IEEE template with workshop marking). If you submit your paper for the PhD forum, please indicate that you are a PhD student.

All submissions will be reviewed in a single-blind process. Submissions must be sent as PDF to:

iros2020-workshop [at] joanneum.at

indicating [IROS 2020 Workshop] in the e-mail subject.

Accepted papers will be published in the open-access repository KITopen under a Creative Commons license (CC BY-NC-ND).

Topics of Interest

- Proximity Sensors

- Multi-Modal Sensors (tactile, shear, vibration, vision, etc.)

- Sensor Calibration

- Robotic Skins (architectures)

- Application Domains for Proximity Sensors

- Human-Robot Interaction

- Human-Robot Collaboration

- Preshaping and Grasping

- Haptic Exploration

- Assistive Robots

- Prosthetics

- Collision Avoidance

- Teleoperation with Proximity Sensing

- Intuitive Robot Programming, Human-Robot Interface

- Multi-Modal Control

- Sensor Fusion

- Underwater Robotics

- Bridging from Tactile Perception to Proximity Perception

- Bio-Inspired Robotics

- Multi-Modal Sensors for Soft Robotics

On-Demand and Live Program

Invited Talks from research, available on-demand starting October 25th:

- Kazuhiro Shimonomura, Ritsumeikan University

- Joshua R. Smith, University of Washington

- Edward Cheung, National Aeronautics and Space Administration (NASA)

- Wenzhen Yuan, Carnegie Mellon University (CMU)

- Tamim Asfour, Karlsruhe Institute of Technology (KIT)

- Andrea Cherubini, Université de Montpellier and LIRMM

- Jan Steckel, University of Antwerp

- Christian Duriez, Inria

Invited Talks from industry, available on-demand starting October 25th:

- Toyoda Gosei (workshop sponsor)

- Blue Danube Robotics (workshop sponsor)

Panel Discussion + Q&A for Invited Speakers, October 28th, 3pm-5pm CET via ZOOM

PhD-Forum, October 29th, 3pm-5pm CET via ZOOM

Invited Speakers


| Speaker | Edward Cheung |
|---|---|
| Invited Talk | A Look at Sensor-based Arm Manipulator Motion Planning in the 1980s |
| Abstract | This presentation looks at the state of sensor-based motion planning for robotic systems in the 1980s. It focuses on work done at Yale University led by Prof. Vladimir Lumelsky and his students. It then follows the author's own work and includes an update on what has been done in the years since. |

| Speaker | Tamim Asfour |
|---|---|
| Invited Talk | Multi-modal Sensing for Semi-Autonomous Grasping in Prosthetic Hands |
| Abstract | The development of anthropomorphic hands with multimodal sensor feedback is key to robust grasping in robotics and to user-centric behavior of prosthetic hands. In this talk, we present our recent work on the development of underactuated, size-scalable anthropomorphic hands that integrate multimodal sensing, including vision, as well as in-hand on-board computing and control. In particular, we present sensorized soft fingertips with in-palm and in-finger vision and show how methods and techniques from robotic grasping can be adopted to realize semi-autonomous control of prosthetic hands, simplifying and improving grasping for the user. |

| Speaker | Joshua R. Smith |
|---|---|
| Invited Talk | A computationally efficient model of octopus sensing & neuro-muscular control |
| Abstract | This talk presents a simple, abstract model of soft robot arm kinematics and a closed-loop distributed control scheme that efficiently produces odor-guided reaching behavior. Mechanically, the arm is modelled as a muscular hydrostat: it consists of constant-volume cells. When muscular forces compress part of a cell, other parts of the cell expand to maintain constant volume. Using this simple mechanical model, we present a neuro-muscular control scheme that takes odor sensing as input and generates muscle commands that yield reaching behavior. This simple scheme efficiently controls an arm model with 36 actuated degrees of freedom, a control task that would be computationally expensive with a conventional planning-based approach. |

| Speaker | Wenzhen Yuan |
|---|---|
| Invited Talk | What Can a Robot Learn from High-resolution Tactile Sensing? |
| Abstract | In this talk, I will introduce the design and working principle of GelSight, a vision-based tactile sensor that measures the geometry of the contact surface with very high spatial resolution, as well as the traction field caused by shear force and torque. I will then discuss how this high-resolution tactile information can extend robots’ perception capabilities, for example by detecting slip during grasping and by estimating the hardness and other physical properties of objects. |

| Speaker | Andrea Cherubini |
|---|---|
| Invited Talk | |
| Abstract | Traditionally, heterogeneous sensor data was fed to fusion algorithms (e.g., Kalman or Bayesian-based), so as to provide state estimation for modeling the environment. However, since robot sensors generally measure different physical phenomena, it is preferable to use them directly in the low-level servo controller rather than to apply them to multi-sensory fusion or to design complex state machines. The rationale behind our work has precisely been to use sensor-based control as a means to facilitate the physical interaction between robots and humans. |

| Speaker | Christian Duriez |
|---|---|
| Invited Talk | Model-based Sensing for Soft Robots |
| Abstract | In this talk, our recent work on modeling for Soft Robotics is presented, this time with a special focus on sensing. In recent years, we have concentrated on solving inverse problems for tasks such as inverse kinematics. We have found that the solution is tractable at interactive rates, even if the deformable numerical model used to represent the robot has on the order of thousands of degrees of freedom. The inverse problems are addressed by formulating an optimization problem. As it turns out, the same framework can also be used for sensing, i.e., by formulating an optimization problem to find the forces and deformations that best explain observed sensor values, such as volume changes or changes in cable length. The result is the ability to use real-world sensors to estimate the state of Soft Robotics devices and to close the loop for control. |

| Speaker | Jan Steckel |
|---|---|
| Invited Talk | Advanced 3D Sonar Sensing for Heavy Industry Applications |
| Abstract | Man-made sonar has a poor reputation in the robotics community. To quote Rodney Brooks, a leading robotics researcher, in his seminal 1986 paper on robot control: “Sonar data, while easy to collect, does not itself lead to rich descriptions of the world useful for truly intelligent interactions [...]. Sonar data may be useful for low-level interactions such as real-time obstacle avoidance.” This quote shows the lack of faith that the robotics community has in sonar as a truly useful sensing modality for complex robotic tasks, which persists today, more than 30 years later. However, bats show on a daily basis that sonar, through echolocation, is indeed an enabler of complex interactions with the environment, thanks to the intricate interplay between the bat’s morphology, behavior and the physics of ultrasound propagation. Based on this existence proof, it is reasonable to assume that more complex and potent sonar sensors can be developed by mankind, invalidating the bad reputation of ultrasound as a sensing modality in robotics. Building on over a decade of intense research on airborne 3D ultrasound sensing, our research group has developed eRTIS, an embedded real-time 3D imaging sonar sensor. The sensor is a powerful 3D ultrasound sensor based on microphone arrays and has been inspired by our research on the echolocation system of bats. In conjunction with this sonar sensor, we have developed a set of biologically inspired control laws that allow an autonomous robot to navigate complex indoor and outdoor environments using ultrasound as its sole sensing modality. To complement these autonomous control laws, we have developed a SLAM (Simultaneous Localization and Mapping) algorithm called BatSLAM, which constructs topological maps of the environment using a bio-inspired model of the mammalian hippocampus. The eRTIS sensor and its accompanying algorithms are currently being evaluated by various industrial entities in a wide range of autonomous vehicle markets, ranging from autonomous cars, mining, construction equipment and agriculture to navigation aids for blind people and autonomous wheelchairs. In this talk, I will outline the engineering process we followed, from gaining insights into bat echolocation to translating these insights into the imaging sonar sensors our research group has developed. Finally, I will outline some of the application potential of 3D sonar in real-world industrial applications and conclude with our future endeavors to further improve 3D airborne ultrasound sensing. |

| Speaker | Kazuhiro Shimonomura |
|---|---|
| Invited Talk | |
| Abstract | |

| Speaker | Genesis Laboy (Toyoda Gosei) |
|---|---|
| Invited Talk | e-Rubber and its Applications |
| Abstract | We have used our expertise in materials technology to develop a next-generation Dielectric Elastomer Actuator/Sensor that could potentially replace the electromagnetic motor. Our e-Rubber sensor offers a unique output range that most sensors cannot achieve, while also being lightweight, silent, thin, and flexible and providing high displacement. Join us to learn how it compares to other existing motors and how it is already being used in industries such as robotics, healthcare, medical training, and VR/AR. |

| Speaker | Michael Zillich (Blue Danube Robotics) |
|---|---|
| Invited Talk | Combined Proximity and Tactile Sensing for Fast Fenceless Automation |
| Abstract | There is a growing need for collaborative, or fenceless, applications with heavy-payload robots. Especially with heavier robots (large moving mass at high velocity), users face the problem of how to meet safety standards while maintaining efficiency. Proximity sensing or 3D vision seem promising but have issues with blind spots. Tactile sensing using our pressure-sensitive safety skin AIRSKIN can cover the entire robot but requires limiting the robot speed to guarantee safe stopping distances. A combination of these two approaches thus seems a straightforward solution, but it needs to be validated from a safety and efficiency standpoint. In my talk, I present a use-case study on palletizing, a typical task for a high-reach, heavy-payload robot. We implemented a two-step safety concept: proximity sensing with a laser range finder slows the robot to a safe speed while it keeps working, and tactile sensing then stops the robot only if there is actual contact. We show that this combination of available certified safety technology already allows efficient and safe fenceless palletizing. Further improvements are expected with ongoing advances in proximity sensing, such as smaller, highly integrated radar solutions. |

Video Session

List of Complementary Video Material (Demos)

| Name/Institute | Description of Research or Technology | External Link |
|---|---|---|
| Research Participants | | |
| Christian Duriez (Inria Lille) | Model-based Sensing for Soft Robotics | A Model-based Sensor Fusion Approach for Force and Shape Estimation in Soft Robotics; FEM-Based Kinematics and Closed-Loop Control of Soft Robots; Inverse kinematics of soft robots with contact handling |
| Edward Cheung (NASA) | Yale Sensor Skin (whole-arm collision avoidance, teleoperation, etc.) | Yale Sensor Skin |
| Andrea Cherubini (LIRMM) | Multi-modal Perception for physical Human-Robot Interaction | Haptic object recognition using a robotic hand: https://www.youtube.com/watch?v=GaGHZXHBDG4&feature=youtu.be; Collaborative dual arm manipulation |
| Tamim Asfour (KIT) | Multi-modal Sensing for Semi-Autonomous Grasping in Prosthetic Hands | Sensor-Based Semi-Autonomous Control of Prosthetic Hands |
| University of Klagenfurt and Joanneum Research ROBOTICS | Radar Sensors in Collaborative Robotics | Radar Sensors in Collaborative Robotics |
| TU Chemnitz | Gripper with Proximity Sensors; Collision Avoidance with Proximity Sensors | Collision Avoidance with Proximity Servoing for Redundant Serial Robot Manipulators: https://www.youtube.com/watch?v=M8pmUbULYOQ; TUC RHMi 3F Gripper |
| CoSys Lab | 3D Sonar | Predicting LiDAR Data from Sonar Images; High-speed video with acoustic intensity overlay of a bat (A. pallidus) capturing a scorpion; eRTIS Live Demonstration @ IEEE Sensors 2019 |
| Kazuhiro Shimonomura (Ritsumeikan University) | Vision-based tactile sensor | Robotic bolt insertion and tightening based on in-hand object localization and force |
| Industrial Participants | | |
| Toyoda Gosei | e-Rubber | e-Rubber product page (including videos) |
| Blue Danube Robotics | TBD | BDR homepage: https://www.bluedanuberobotics.com/; BDR YouTube Channel |

PhD Forum

- October 29th, 3pm-5pm CET via ZOOM

- 7am-9am PDT

- 10am-12pm EDT

- 11pm-1am JST (Japan)

| Speaker | Affiliation | Title |
|---|---|---|
| Hosam Alagi | Karlsruhe Institute of Technology | Versatile Capacitive Sensor for Robotic Applications |
| Yitao Ding | Chemnitz University of Technology | Proximity Perception for Reactive Robot Motions |
| Francesca Palermo | Queen Mary University of London | Robotic visual-tactile multi-modal sensing for fracture detection |
| Christian Schöffmann & Barnaba Ubezio | University of Klagenfurt & Joanneum Research ROBOTICS | Radar Sensors in Collaborative Robotics |

Main Organizers:

Co-Organizers: